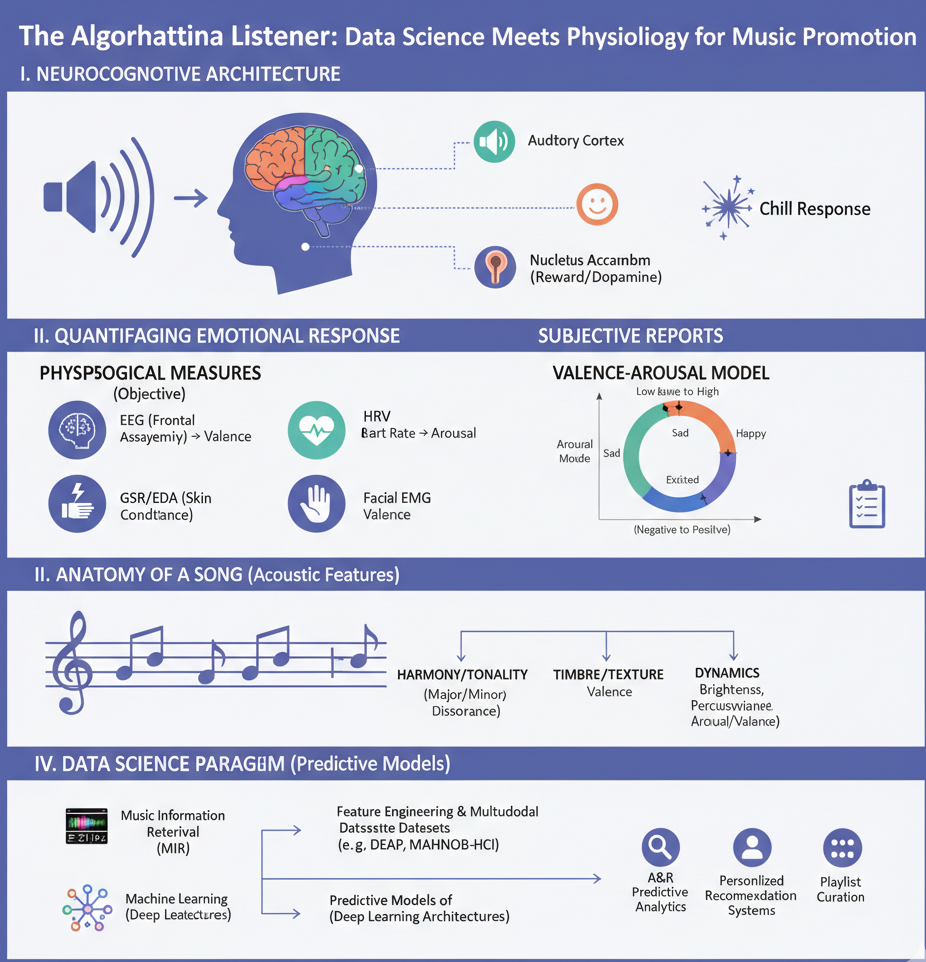

This paper presents a comprehensive synthesis of research from cognitive neuroscience, psychophysiology, and data science to construct a modern framework for understanding the mechanisms behind music promotion. We begin by delineating the neurocognitive architecture of music perception, tracing the pathway from auditory stimulus to complex emotional experience, with a focus on the brain’s reward circuitry. We then review the psychophysiological methods (e.g., EEG, fMRI, GSR, HRV) used to objectively quantify these emotional responses, linking them to the dimensional model of affect (valence and arousal).

Introduction: The New Science of Musical Appeal

The fundamental challenge in music promotion is to connect a musical work with an audience that will find it engaging and emotionally resonant. For decades, this process has been guided by the intuition of A&R executives, radio programmers, and other cultural tastemakers. However, the contemporary digital landscape demands a more precise and scalable approach. This paper addresses a central question: Can the intuitive art of music promotion be augmented, or even partially supplanted, by a scientific, predictive model of musical appeal? Answering this requires reframing the listener not merely as a consumer with subjective tastes, but as a biological system whose cognitive and physiological responses to auditory stimuli can be measured, modeled, and, to a significant degree, predicted.1

The foundation for such a model lies at the convergence of three distinct but complementary scientific fields. First, cognitive neuroscience provides the “hardware” schematic of the human brain, mapping the neural pathways that process musical information and detailing the structures involved in generating pleasure, emotion, and memory.4 Second, psychophysiology offers the methodological tools to measure the “software” output: the real-time emotional and physiological reactions of a listener, such as changes in heart rate, skin conductance, and brainwave activity.8 These measurements provide an objective, quantifiable proxy for the subjective experience of listening to music. Third, data science, encompassing the sub-fields of Music Information Retrieval (MIR) and Affective Computing, supplies the analytical framework. It provides the techniques to deconstruct a musical stimulus into a vector of quantifiable features and to build computational models that link these features to the measured physiological and emotional responses.12

This paper will demonstrate that by integrating physiological insights into the human response to music with advanced computational models, a robust, data-driven framework for understanding, predicting, and ultimately enhancing the effectiveness of music promotion can be established. This interdisciplinary synthesis transforms the abstract concept of “musical appeal” into a quantifiable and modelable phenomenon, paving the way for a new, empirical science of musical engagement.

Section I: The Neurocognitive Architecture of Music Perception

To understand how music promotion can be optimized, one must first understand the biological machinery that music engages. Music is not simply a cultural artifact; it is a uniquely powerful stimulus that co-opts and stimulates fundamental neural systems responsible for perception, cognition, emotion, and reward. This section establishes the physiological foundation for music’s profound impact on the human listener.

1.1 Decoding Sound: From the Cochlea to the Auditory Cortex

The journey of music perception begins with the physical transduction of sound waves into neural signals. Air vibrations are converted into electrical impulses by the cochlea, a spiral-shaped cavity in the inner ear that maintains a tonotopic map, where different frequencies activate dedicated regions along its structure.2 This frequency-specific information is then transmitted via the auditory nerve through several synaptic layers in the auditory brainstem to the primary auditory cortex (Heschl’s gyrus), located in the temporal lobe.2 This initial stage of hierarchical processing deconstructs the sound into its basic acoustic features, forming the foundation upon which all subsequent cognitive and emotional interpretations are built.4

1.2 Neural Correlates of Musical Elements

The brain does not process music as a single, monolithic entity. Instead, neuroimaging studies reveal that it is deconstructed into its constituent elements, with specialized and often lateralized neural networks dedicated to processing each component. This functional specialization means that a song’s “catchiness” is not a singular quality but a composite of parallel processing streams, each offering a potential “hook” for the listener.

Pitch and Melody: The perception of pitch and the contour of a melody involves a distributed network that includes the superior temporal gyrus (STG) and the planum temporale, with a notable right-hemisphere preference for processing melodic shape and resolving finer pitch distinctions.4 The brain is highly adept at tracking melodic patterns and automatically detects anomalies, such as an out-of-key note, which elicit a characteristic early negative brain potential, the early right anterior negativity (ERAN).6

Rhythm and Tempo: The processing of rhythm is a multisensory task, engaging not only auditory regions but also motor networks. Key areas include the left frontal cortex, left parietal cortex, and the right cerebellum.6 The compelling sensation of a “beat” or “groove” arises from a process of neural entrainment, where oscillations in the brain’s auditory and motor cortices synchronize with the musical rhythm.15 The putamen (basal ganglia) is also critical for processing rhythm and regulating the body’s movement in response to it.17

Harmony and Tonality: The brain distinguishes between the structural rules of music (syntax) and its meaning (semantics). The processing of musical syntax, such as the predictable progression of chords in a key, activates regions like the inferior frontolateral cortex. In contrast, the processing of musical semantics appears to engage posterior temporal regions, which are involved in deriving meaning from the harmonic context.18

Timbre: Timbre, the unique “color” or quality of a sound that distinguishes a violin from a piano playing the same note, is processed via a sophisticated dual-stream model. A ventral auditory stream, encompassing the anterior STG, is primarily involved in sound identification and decoding emotional cues from timbre. Concurrently, a dorsal stream, including the posterior STG and inferior parietal lobe, is responsible for sequential processing and recognizing the actions that produce the sound.19 This dual analysis demonstrates that the brain processes even the texture of a sound for both its identity and its structural role in a sequence.

1.3 The Role of Memory, Attention, and Expectancy

A musical experience is not a passive reception of sound but an active cognitive process profoundly shaped by memory and prediction. The brain leverages past experiences to form expectations about what will come next, and the interplay between expectation and reality is a primary driver of musical emotion.

Musical memory is multifaceted, involving distinct neural systems for different types of knowledge. The brain differentiates between semantic memory, which includes factual knowledge about music and a sense of familiarity with a song, and episodic memory, which links a piece of music to specific personal experiences.4 Neuroimaging studies show that musical semantic memory activates a network including the left anterior temporal and inferior frontal regions, whereas the recall of musical episodic memories preferentially engages the middle and superior frontal gyri and the precuneus, with a right-hemisphere predominance.6

Building on this foundation of memory, the brain operates as a predictive machine, constantly generating hypotheses about upcoming musical events.15 This process of forming expectations is fundamental to music cognition. The subsequent confirmation, delay, or violation of these expectations is a key mechanism through which music generates tension, release, surprise, and pleasure.4 For formal treatments of expectation in music cognition, see Pearce & Rohrmeier.
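The notion of expectation violation can be made concrete with a toy information-theoretic sketch. The example below is an illustrative assumption rather than a method taken from the cited literature: it fits a first-order (bigram) model to a hypothetical pitch sequence and reports the surprisal, in bits, of a transition, so that an unheard continuation scores higher than a familiar one. The function names, the add-one smoothing, and the toy melody are all hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_bigram(sequence):
    """Count how often each note follows each other note."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(sequence, sequence[1:]):
        counts[prev][nxt] += 1
    return counts


def surprisal(counts, prev, nxt, alphabet):
    """Surprisal (-log2 p) of `nxt` following `prev`, with add-one smoothing."""
    total = sum(counts[prev].values()) + len(alphabet)
    prob = (counts[prev][nxt] + 1) / total
    return -math.log2(prob)


# Hypothetical training melody as MIDI pitch numbers (a C major fragment).
melody = [60, 62, 64, 62, 60, 62, 64, 62, 60, 62, 64, 65, 64, 62, 60]
alphabet = sorted(set(melody) | {61})  # add one out-of-key pitch (C sharp)
model = train_bigram(melody)

expected = surprisal(model, 60, 62, alphabet)    # C -> D, heard three times
unexpected = surprisal(model, 60, 61, alphabet)  # C -> C sharp, never heard
print(f"expected: {expected:.2f} bits, unexpected: {unexpected:.2f} bits")
```

In this toy setting the out-of-key continuation carries more bits of surprisal than the familiar one, which is one simple way to operationalize a "violated expectation". Real models of musical expectation are considerably richer than a bigram count.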

1.4 The Brain’s Reward System: Dopamine and the “Chill” Response

The most compelling evidence for music’s biological power lies in its ability to activate the brain’s core reward circuitry. Listening to pleasurable music, especially during peak emotional moments often described as “chills” or “shivers down the spine,” triggers the release of the neurotransmitter dopamine in the striatum, a key reward structure that includes the nucleus accumbens in its ventral portion.10 This is the very same neural system implicated in the pleasure derived from primary rewards like food and sex, as well as from addictive substances, highlighting music’s capacity to act as a potent neurochemical stimulus.3 For overviews, see “Music and the brain: the neuroscience of music and musical appreciation” and “Your Brain on Music.”

The emotional power of music is thus fundamentally tied to this interplay between predictive processing and reward. The most potent emotional responses are often generated not by the sounds themselves, but by the relationship between expected and actual sounds. This neurochemical process provides a causal chain: a song’s structure creates expectations; the brain’s response to the fulfillment or violation of these expectations modulates dopamine release; and this neurochemical event is subjectively experienced as pleasure, tension, or surprise. This mechanism is transformative for understanding musical engagement, as it suggests that the most successful music is that which masterfully balances predictability with novelty, thereby optimally manipulating the brain’s expectancy-reward cycle.

Further research has revealed a sophisticated temporal dynamic in this process. Dopamine is released not only during the peak emotional experience itself but also in anticipation of it. The dorsal striatum shows increased activity during the anticipatory phase (the “wanting”), while the ventral striatum is most active during the peak consummatory experience (the “liking”).10 This dual process of anticipation and reward is a critical physiological mechanism underpinning sustained musical engagement.

| Brain Region | Primary Function in Music Processing |
|---|---|
| Auditory Cortex (Temporal Lobe) | Initial processing of acoustic features (pitch, loudness, timbre). The right hemisphere is specialized for melody, pitch, and timbre perception.4 |
| Frontal Lobe (Prefrontal Cortex) | Higher-order cognitive functions: thinking, decision-making, planning, and processing musical structure and syntax.6 |
| Parietal Lobe | Integration of sensory information; involved in musical attention and memory, particularly episodic memory recall (precuneus).4 |
| Cerebellum | Coordination of movement and timing; crucial for processing rhythm and the body’s motor response to music.6 |
| Amygdala | Processes and triggers emotions, particularly fear and pleasure; activated during intense emotional responses to music, such as “chills”.17 |
| Hippocampus | Essential for memory formation and retrieval; links music to personal (episodic) memories and regulates emotional responses.6 |
| Nucleus Accumbens | A core component of the brain’s reward system; releases dopamine in response to pleasurable music, creating feelings of pleasure and reinforcement.10 |
| Putamen | Involved in processing rhythm and regulating body movement and coordination; activated by rhythmic music.17 |

Section II: Quantifying the Emotional Response: Psychophysiological and Subjective Measurement

To build predictive models of musical appeal, it is necessary to move beyond the unobservable neural events in the brain and capture their measurable manifestations. Psychophysiology provides the methodologies to objectively quantify a listener’s internal state in real time. These objective measures, when combined with subjective self-reports, form the empirical ground truth upon which computational models of music emotion are built.

2.1 The Dimensional Model of Emotion: Valence and Arousal

While everyday language describes emotions using discrete categories like “happy,” “sad,” or “angry,” much of the scientific literature models affect along two continuous, orthogonal dimensions. This valence-arousal model is highly advantageous for computational analysis, as it transforms subjective feeling states into a quantifiable, two-dimensional coordinate space.22

Valence represents the pleasantness of an emotional state, ranging from positive (e.g., happy, elated) to negative (e.g., sad, stressed).11

Arousal represents the intensity or energy level of the state, ranging from low (e.g., calm, bored) to high (e.g., excited, agitated).11

This framework allows researchers to map any emotional state as a point in this 2D space, making it amenable to the regression and classification tasks common in machine learning.22
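As a minimal sketch of how this coordinate representation might be handled in code, the example below names the quadrant of a (valence, arousal) point and measures the distance between two points. The scaling of both axes to [-1, 1], the neutral point at 0, and the function names are assumptions made for illustration; studies differ in how they scale the two dimensions.

```python
import math


def affect_quadrant(valence, arousal):
    """Name the quadrant of a point in valence-arousal space.

    Both coordinates are assumed to be scaled to [-1, 1], with 0 as neutral.
    """
    if not (-1.0 <= valence <= 1.0 and -1.0 <= arousal <= 1.0):
        raise ValueError("valence and arousal must lie in [-1, 1]")
    valence_label = "positive" if valence >= 0 else "negative"
    arousal_label = "high" if arousal >= 0 else "low"
    return f"{valence_label} valence, {arousal_label} arousal"


def affect_distance(a, b):
    """Euclidean distance between two (valence, arousal) points."""
    return math.dist(a, b)


# Hypothetical ratings for two listening moments.
excited = (0.7, 0.8)
calm = (0.6, -0.7)
print(affect_quadrant(*excited))
print(affect_quadrant(*calm))
print(round(affect_distance(excited, calm), 3))
```

Treating the quadrant as a class label corresponds to the classification framing, while predicting the two coordinates directly corresponds to the regression framing; the distance function is one plausible error measure for the latter.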

2.2 Central Nervous System (CNS) Measures

Direct measures of brain activity provide the most proximate data on cognitive and emotional processing.

Electroencephalography (EEG): This non-invasive technique uses scalp electrodes to measure the brain’s electrical activity. Its primary advantage is its excellent temporal resolution (on the order of milliseconds), making it ideal for tracking the brain’s rapid, dynamic responses to musical events.8 A key feature derived from EEG data is frontal alpha asymmetry, which compares the power of alpha-band oscillations (8–13 Hz) between the left and right frontal lobes. Because alpha power is inversely related to cortical activation, relatively lower alpha power over the left than the right frontal cortex indicates relatively greater left-frontal activity. Such a relative increase in left-frontal activity is often associated with positive valence and approach-oriented motivation, providing a potential neural marker for musical pleasure.10 See Frontiers: Frontal Brain Asymmetry & Musical Change.
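A frontal alpha asymmetry index is commonly computed as the difference of log alpha power between a right and a left frontal electrode. The sketch below follows that convention under stated assumptions: the signals are synthetic sine waves standing in for one left and one right frontal channel, alpha power is averaged over 8 to 13 Hz with a direct DFT for self-containment, and all function names are hypothetical. A practical pipeline would add artifact rejection and a windowed spectral estimate.

```python
import cmath
import math


def band_power(signal, fs, f_lo, f_hi):
    """Mean spectral power of `signal` between f_lo and f_hi (Hz), via a direct DFT."""
    n = len(signal)
    mean = sum(signal) / n
    powers = []
    for k in range(1, n // 2):
        freq = k * fs / n
        if f_lo <= freq <= f_hi:
            coeff = sum((x - mean) * cmath.exp(-2j * math.pi * k * t / n)
                        for t, x in enumerate(signal))
            powers.append(abs(coeff) ** 2 / n)
    return sum(powers) / len(powers)


def frontal_alpha_asymmetry(left, right, fs):
    """ln(right alpha power) - ln(left alpha power) over 8-13 Hz."""
    return (math.log(band_power(right, fs, 8.0, 13.0))
            - math.log(band_power(left, fs, 8.0, 13.0)))


# Synthetic 2-second recordings at 128 Hz: a 10 Hz alpha rhythm that is
# twice as large on the right channel as on the left (assumed data).
fs = 128
t = [i / fs for i in range(2 * fs)]
left = [0.5 * math.sin(2 * math.pi * 10 * ti) for ti in t]
right = [1.0 * math.sin(2 * math.pi * 10 * ti) for ti in t]

faa = frontal_alpha_asymmetry(left, right, fs)
print(f"asymmetry index: {faa:.3f}")
```

With this sign convention a positive index means more alpha on the right, that is, relatively greater left-frontal activity, which the text above links to positive valence. The index is only a candidate marker and should be read alongside other measures.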

Functional Magnetic Resonance Imaging (fMRI): In contrast to EEG, fMRI offers excellent spatial resolution by measuring changes in blood flow, allowing researchers to pinpoint which specific brain structures are activated during music listening.5 However, its poor temporal resolution (on the order of seconds) and the loud, restrictive environment of the scanner present significant challenges for studying the dynamic and nuanced experience of music.27

The choice of measurement technology thus involves a critical trade-off. Lab-based fMRI and high-density EEG studies are invaluable for establishing the fundamental neural mechanisms of music perception. These foundational findings can then be used to validate and interpret data from more scalable and ecologically valid wearable sensors, creating a research pipeline that extends from basic science to applied, “in-the-wild” market research.

2.3 Autonomic Nervous System (ANS) Measures

The ANS regulates involuntary bodily functions and its activity provides reliable, objective indicators of emotional arousal. These signals can be captured non-invasively, often with wearable biosensors, making them suitable for more naturalistic research settings.8

Heart Rate Variability (HRV): This metric quantifies the variation in time between consecutive heartbeats. It reflects the balance between the sympathetic (“fight-or-flight”) and parasympathetic (“rest-and-digest”) branches of the ANS. Generally, higher HRV is indicative of a relaxed state (parasympathetic dominance), while lower HRV is associated with stress and higher arousal (sympathetic dominance).8 See Frontiers: Cardiac & Electrodermal Responses.
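Two widely used time-domain summaries of HRV are SDNN (the standard deviation of inter-beat intervals) and RMSSD (the root mean square of successive differences). The text above does not name a specific metric, so their use here is an assumption, and the inter-beat interval values are invented for illustration.

```python
import math
import statistics


def sdnn(ibi_ms):
    """Standard deviation of inter-beat intervals, in milliseconds."""
    return statistics.stdev(ibi_ms)


def rmssd(ibi_ms):
    """Root mean square of successive inter-beat interval differences, in ms."""
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical inter-beat intervals (ms) for two listening conditions.
relaxed = [800, 850, 790, 860, 810, 870]   # slower, more variable heartbeat
aroused = [600, 605, 598, 603, 600, 604]   # faster, more regular heartbeat

print(f"relaxed: SDNN={sdnn(relaxed):.1f} ms, RMSSD={rmssd(relaxed):.1f} ms")
print(f"aroused: SDNN={sdnn(aroused):.1f} ms, RMSSD={rmssd(aroused):.1f} ms")
```

In this invented example the relaxed series has the higher variability on both metrics, matching the direction of effect described above. Real recordings need longer windows and ectopic-beat correction before such values are interpretable.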

Galvanic Skin Response (GSR) / Electrodermal Activity (EDA): This is a measure of the skin’s electrical conductance, which is directly modulated by the activity of sweat glands controlled by the sympathetic nervous system. An increase in GSR/EDA is a highly sensitive and direct marker of emotional arousal.8

Other Peripheral Measures: Additional data streams include respiration rate, skin temperature, and facial electromyography (EMG), which measures muscle activity. Specifically, activity in the zygomaticus major muscle is associated with smiling (positive valence), while activity in the corrugator supercilii muscle is associated with frowning (negative valence).31

2.4 Integrating Subjective Self-Reports with Objective Physiological Data

A crucial methodological component of music emotion research is the simultaneous collection of objective physiological data and subjective self-reports of emotional experience.9 This integration is necessary because physiological signals exhibit a fundamental asymmetry in how they map to the dimensions of emotion. Measures of ANS activity, such as GSR and HRV, are excellent and robust indicators of the arousal dimension.11 However, they are often poor at distinguishing the valence of that arousal; for instance, the high arousal associated with intense joy can produce a similar GSR response to the high arousal associated with fear or anger.32

To resolve this ambiguity and build a complete picture of the emotional state, a multimodal approach is essential. By combining ANS measures of arousal with CNS measures sensitive to valence (like EEG frontal asymmetry) or with facial EMG, researchers can more accurately locate the listener’s state within the two-dimensional valence-arousal space.32 This highlights that for promotional research, simply measuring a listener’s excitement (arousal) is insufficient; to understand preference (valence), one must incorporate measures sensitive to the positive or negative quality of that excitement. See Predicting musically induced emotions from physiological inputs and Modeling Music Emotion Judgments.

| Physiological Signal | Measurement | Primary Emotional Correlate | Direction of Effect |
|---|---|---|---|
| EEG | Frontal Alpha Asymmetry | Valence | Increased left-asymmetry → Positive Valence 10 |
| HRV | Inter-Beat Interval Variability | Arousal | Decreased HRV → Higher Arousal / Stress 8 |
| GSR / EDA | Skin Conductance Level (SCL) | Arousal | Increased SCL → Higher Arousal 11 |
| Facial EMG | Zygomaticus Major Activity | Valence | Increased activity → Positive Valence (Smiling) 31 |
| Facial EMG | Corrugator Supercilii Activity | Valence | Increased activity → Negative Valence (Frowning) 31 |
| Respiration | Respiration Rate | Arousal | Increased rate → Higher Arousal 36 |

Section III: The Anatomy of a Song: Linking Acoustic Structure to Affective Response

Having established the neurophysiological framework for music perception and the methods for its measurement, the focus now shifts to the stimulus itself. This section deconstructs a song into its core, computationally extractable components—its acoustic and structural features—and reviews the empirical evidence linking these features to specific affective responses. This process, known as feature engineering, is fundamental to building predictive models of music emotion. The evidence reveals a remarkable mapping where distinct sets of musical features independently influence the dimensions of valence and arousal.

3.1 Temporal Dynamics: The Impact of Tempo and Rhythm on Arousal

The temporal characteristics of music are the most powerful and consistent drivers of physiological and perceived arousal.

Tempo: Measured in beats per minute (BPM), tempo exhibits a strong, positive correlation with arousal. A large body of research demonstrates that faster tempos lead to higher self-reported arousal, increased heart rate, elevated skin conductance, and faster respiration rates.30 Conversely, slower tempos are associated with calmer, low-arousal states.39 This relationship is likely rooted in the biological principle of rhythmic entrainment, where the body’s internal rhythms synchronize with the external musical pulse (see PNAS: Music & Movement and related experimental paradigms).

Rhythm: Beyond mere speed, the characteristics of the rhythm itself are crucial. Features such as rhythmic articulation (the degree to which notes are separated, e.g., staccato vs. legato), rhythmic clarity, and accentuation significantly modulate arousal. Music characterized by sharp, clear, highly accentuated, and staccato rhythms is consistently found to induce higher physiological arousal than music with smooth, flowing, and less defined rhythms.37
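The two temporal features discussed above can be sketched from note timing data alone. The example below assumes beat and note times are already available (in practice they would come from a beat tracker or a symbolic score), estimates tempo from the median inter-beat interval, and computes an articulation ratio as sounded duration divided by inter-onset interval. The articulation ratio is one plausible operationalization of staccato versus legato, not a definition taken from the cited studies, and all names and values are hypothetical.

```python
import statistics


def estimate_bpm(beat_times_s):
    """Tempo in beats per minute from the median interval between beats."""
    intervals = [b - a for a, b in zip(beat_times_s, beat_times_s[1:])]
    return 60.0 / statistics.median(intervals)


def articulation_ratio(onsets_s, offsets_s):
    """Mean ratio of sounded duration to inter-onset interval.

    Values near 1 suggest legato playing; values near 0 suggest staccato.
    The final note is skipped because it has no following onset.
    """
    ratios = []
    for i in range(len(onsets_s) - 1):
        ioi = onsets_s[i + 1] - onsets_s[i]
        ratios.append((offsets_s[i] - onsets_s[i]) / ioi)
    return statistics.mean(ratios)


# Hypothetical timing data (seconds): one note on every beat.
onsets = [0.0, 0.5, 1.0, 1.5]
staccato_offsets = [0.1, 0.6, 1.1, 1.6]
legato_offsets = [0.48, 0.98, 1.48, 1.98]

bpm = estimate_bpm(onsets)
staccato = articulation_ratio(onsets, staccato_offsets)
legato = articulation_ratio(onsets, legato_offsets)
print(f"{bpm:.0f} BPM, staccato ratio {staccato:.2f}, legato ratio {legato:.2f}")
```

Using the median rather than the mean interval keeps the tempo estimate stable when a single beat is missed or doubled.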

3.2 Tonal and Harmonic Features: The Role of Mode, Consonance, and Complexity in Valence

While temporal features govern arousal, the tonal and harmonic content of music is the primary determinant of emotional valence.

Musical Mode: In the context of Western tonal music, the distinction between major and minor modes is one of the most robust cues for valence. The major mode is strongly and cross-culturally associated with positive emotions like happiness, while the minor mode is associated with negative emotions like sadness.11 For overview and pedagogy, see Music & Science: Emotion and Constructionist accounts.

Harmony and Consonance: The complexity and dissonance of the harmony also play a key role. Simple, consonant harmonies—where frequencies are in simple integer ratios—are broadly perceived as pleasant and are associated with high valence ratings.37 In contrast, complex and dissonant harmonies are often perceived as tense or unpleasant, corresponding to lower valence. This preference for consonance appears to have a biological basis, as even infants react positively to melodic sounds and negatively to dissonant, “noisy” sounds.36
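The phrase "simple integer ratios" can be illustrated with a rough numerical heuristic. The sketch below scores a few just-intonation intervals by Tenney height, defined as log2 of the product of numerator and denominator of the reduced frequency ratio. This heuristic is swapped in here purely for illustration: it is not a perceptual model, it is not drawn from the cited studies, and the interval ratios are the conventional just-intonation values.

```python
import math
from fractions import Fraction

# Conventional just-intonation frequency ratios for a few intervals.
JUST_INTERVALS = {
    "octave": Fraction(2, 1),
    "perfect fifth": Fraction(3, 2),
    "major third": Fraction(5, 4),
    "minor second": Fraction(16, 15),
    "tritone": Fraction(45, 32),
}


def tenney_height(ratio):
    """log2(numerator * denominator) of a reduced frequency ratio.

    Lower values correspond to simpler integer ratios.
    """
    return math.log2(ratio.numerator * ratio.denominator)


ranking = sorted(JUST_INTERVALS, key=lambda name: tenney_height(JUST_INTERVALS[name]))
for name in ranking:
    print(f"{name:14s} {tenney_height(JUST_INTERVALS[name]):.2f}")
```

The intervals traditionally described as consonant (octave, fifth, major third) receive the lowest scores, while the minor second and tritone receive the highest. Perceived consonance also depends on timbre, register, and musical context, which a ratio-based score ignores.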

3.3 Timbre and Texture: The Sonic Signature of Emotion

Timbre, or tone color, provides another rich layer of emotional information. It is a multidimensional attribute characterized by the spectral shape of a sound.

Spectral Features: Computationally, timbre can be described by features such as spectral centroid (related to brightness), spectral flux (rate of change in the spectrum), and roughness. Brighter, smoother timbres are often linked to positive emotions, while spectrally rough or harsh timbres can be associated with negative-valence, high-arousal emotions like anger.28 See surveys such as Audio Features for MER.

Percussiveness: A specific timbral quality related to the sharpness of a sound’s attack-decay envelope, percussiveness, has been shown to be an independent predictor of arousal. Music with higher percussiveness elicits an increase in skin conductance responses, even when tempo is controlled for.30

Musical Texture: This refers to the density and interplay of different melodic lines. Research suggests that simple, thin textures are associated with higher valence levels, whereas complex, thick harmonies and textures are appraised with lower valence.40
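Of the spectral features named above, the spectral centroid is the simplest to state: it is the magnitude-weighted mean frequency of the spectrum. The sketch below computes it with a direct DFT on two synthetic tones so that it runs without external libraries; the signals, sample rate, and function names are assumptions, and a practical system would compute the centroid frame by frame on windowed audio.

```python
import cmath
import math


def magnitude_spectrum(signal, fs):
    """Frequencies (Hz) and DFT magnitudes for bins from 0 up to Nyquist."""
    n = len(signal)
    freqs, mags = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        freqs.append(k * fs / n)
        mags.append(abs(coeff))
    return freqs, mags


def spectral_centroid(signal, fs):
    """Magnitude-weighted mean frequency of the signal, in Hz."""
    freqs, mags = magnitude_spectrum(signal, fs)
    return sum(f * m for f, m in zip(freqs, mags)) / sum(mags)


# Synthetic tones: 0.2 s at a 1000 Hz sample rate (assumed values).
fs = 1000
t = [i / fs for i in range(200)]
dull = [math.sin(2 * math.pi * 100 * ti) for ti in t]
bright = [math.sin(2 * math.pi * 100 * ti) + math.sin(2 * math.pi * 400 * ti)
          for ti in t]

dull_centroid = spectral_centroid(dull, fs)
bright_centroid = spectral_centroid(bright, fs)
print(f"dull: {dull_centroid:.1f} Hz, bright: {bright_centroid:.1f} Hz")
```

Adding an equally strong partial at 400 Hz moves the centroid upward, which is the sense in which a higher centroid tracks a "brighter" timbre.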

3.4 Expressivity and Performance: Articulation, Dynamics, and their Physiological Imprint

The emotional content of music is not solely defined by its composed structure but is also heavily influenced by the expressive choices of the performer.

Dynamics: Variations in loudness (dynamics) are a key expressive tool. Sudden changes in dynamics, such as a loud accent or a rapid crescendo, can trigger orienting responses and brain stem reflexes in the listener, leading to immediate spikes in physiological arousal.10

Performance Cues: Subtle performance nuances, such as the rate and depth of vibrato in a vocal or string performance, have been shown to correlate directly with the perceived emotional expression of the music.44

A critical finding from this body of research is that emotional responses are not merely triggered by the static, average properties of a song but are acutely sensitive to change and novelty in these acoustic features over time. Peak physiological responses frequently coincide with moments of significant musical change—the introduction of a new instrument, a sudden shift in dynamics, or an unexpected harmonic turn.10 This aligns with the predictive processing model of the brain, where a change represents a “prediction error” that commands attention and triggers an affective re-evaluation of the stimulus. This implies that a successful piece of music must possess a compelling “emotional arc,” using dynamic changes in its acoustic features to build tension, create surprise, and maintain listener engagement.
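The sensitivity to change described above suggests tracking features over time rather than averaging them across a song. As a minimal sketch, the example below computes frame-wise root mean square (RMS) energy and locates the frame with the largest increase over its predecessor, a crude stand-in for a sudden dynamic accent. The frame length, the toy signal, and the function names are assumptions; real novelty detection would combine several features and smooth the result.

```python
import math


def frame_rms(signal, frame_len):
    """RMS energy of consecutive non-overlapping frames."""
    frames = [signal[i:i + frame_len]
              for i in range(0, len(signal) - frame_len + 1, frame_len)]
    return [math.sqrt(sum(x * x for x in frame) / len(frame)) for frame in frames]


def largest_jump(values):
    """Index of the frame with the largest increase over the previous frame."""
    deltas = [b - a for a, b in zip(values, values[1:])]
    return deltas.index(max(deltas)) + 1


# Toy signal: four quiet frames followed by two loud frames (assumed data).
signal = [0.1, -0.1] * 8 + [0.8, -0.8] * 4
energy = frame_rms(signal, frame_len=4)
jump_frame = largest_jump(energy)

print([round(e, 2) for e in energy])
print(f"largest energy increase at frame {jump_frame}")
```

Applied to the time series of any feature (energy, spectral centroid, key clarity), the same difference-based idea gives a first approximation of where a listener's prediction error is likely to peak.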

| Musical Dimension | Computationally Extractable Feature | Primary Emotional Correlate | Direction of Effect |
|---|---|---|---|
| Tempo/Rhythm | Beats Per Minute (BPM) | Arousal | Higher BPM → Higher Arousal 30 |
| Tempo/Rhythm | Rhythmic Articulation (Staccato) | Arousal | More Staccato → Higher Arousal 37 |
| Tempo/Rhythm | Pulse Clarity / Rhythmic Strength | Arousal | Higher Clarity → Higher Arousal 33 |
| Harmony/Tonality | Mode (Major/Minor) | Valence | Major Mode → Positive Valence 30 |
| Harmony/Tonality | Harmonic Complexity / Dissonance | Valence | Higher Dissonance → Negative Valence 37 |
| Harmony/Tonality | Key Clarity | Valence | Higher Clarity → Positive Valence 46 |
| Timbre/Texture | Spectral Centroid (Brightness) | Arousal/Valence | Higher Centroid → Higher Arousal, Positive Valence 33 |
| Timbre/Texture | Percussiveness | Arousal | Higher Percussiveness → Higher Arousal 30 |
| Timbre/Texture | Roughness | Valence | Higher Roughness → Negative Valence 46 |
| Dynamics | Root Mean Square (RMS) Energy | Arousal | Higher Energy → Higher Arousal 33 |