Executive Summary: The Year of the Grand Bargain

The year 2026 stands as the definitive watershed moment in the history of the music industry’s relationship with artificial intelligence. We have moved decisively beyond the “Wild West” era of 2023-2024—a period characterized by existential panic, rampant copyright infringement litigation, unauthorized vocal cloning, and a binary discourse that pitted “human artistry” against “machine theft.” The industry has now entered a phase of mature, institutional integration and regulated commercialization, a transition underpinned by a series of landmark “grand bargains” between major rights holders and generative AI technology firms.

While early forecasts from the mid-2020s predicted a catastrophic displacement of human creativity, the reality of 2026 is far more nuanced and economically generative. AI has not replaced the artist; rather, it has fundamentally reconstructed the infrastructure of production, distribution, and discovery. The global AI in Music market is on a trajectory to reach approximately $60.44 billion by 2034, expanding at a CAGR of 27.80%.1 This explosive growth is not merely driven by consumer-facing generative tools for hobbyists but by deep, invisible integration into professional workflows—from stem separation and automated mastering to “active listening” technologies that are reimagining the very nature of music consumption.

The prevailing theme of this report is the shift from displacement to augmentation and new asset creation. We are witnessing the birth of the “Centaur Producer”—artists who seamlessly blend human intent with machine capability—and the “Active Listener,” a consumer who no longer passively receives a static file but interacts with a dynamic, fluid musical object. This report provides an exhaustive, expert-level analysis of the state of AI in music as of early 2026. It examines the economic drivers reshaping valuations, the evolving legal frameworks in the European Union and the United States, the specific adoption behaviors across genres from K-Pop to Reggaeton, and the technological roadmap of key players like Suno, Udio, and the newly pivotal Klay Vision.

The Economic Landscape: Market Sizing, Forecasts, and Capital Flows

The economic footprint of AI in the music industry has expanded from a speculative venture capital vertical into a core revenue driver for the broader entertainment sector. The financial data for 2025-2026 indicates an aggressive acceleration in value capture, driven by the stabilization of business models that successfully bridge the gap between technological innovation and intellectual property rights. Meanwhile, emerging AI music detectors are racing to keep up with demand.

Global Market Valuations and Growth Trajectories

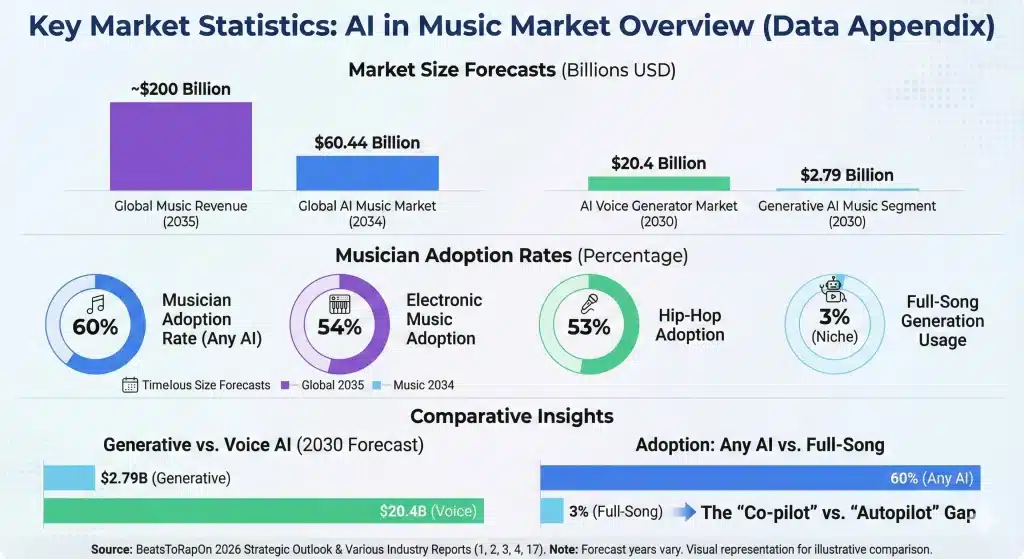

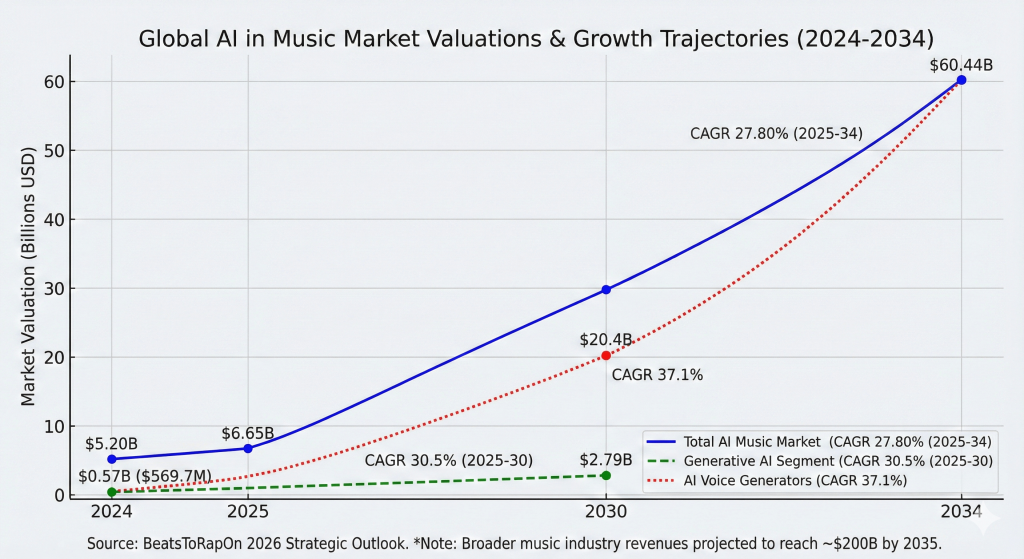

The global AI in music market, which accounted for a valuation of $5.20 billion in 2024, is predicted to increase to $6.65 billion in 2025.1 This initial surge is merely the preamble to a decade of exponential growth, with forecasts projecting the market to reach approximately $60.44 billion by 2034. This trajectory represents a Compound Annual Growth Rate (CAGR) of 27.80% from 2025 to 2034, a figure that significantly outpaces the growth rates of the traditional recording industry. Within this broader market, specific sub-segments are demonstrating even more aggressive velocity.

The global generative AI in music market—specifically software that creates new audio assets rather than merely processing them—generated revenue of $569.7 million in 2024 and is expected to reach $2,794.7 million by 2030, growing at a CAGR of 30.5%.2 Even more explosive is the AI voice generator market, projected to reach $20.4 billion by 2030 with a staggering CAGR of 37.1%.3 These figures suggest that while the tools for making music are valuable, the tools for simulating human performance (voice) are attracting outsized capital investment due to their applications across not just music, but gaming, film, and virtual reality.

| Metric | 2024 Value (USD) | 2025 Forecast (USD) | 2030 Forecast (USD) | 2034 Forecast (USD) | CAGR |
|---|---|---|---|---|---|
| Total AI Music Market | $5.20 Billion | $6.65 Billion | N/A | $60.44 Billion | 27.80% (2025-34) |
| Generative AI Segment | $569.7 Million | N/A | $2.79 Billion | N/A | 30.5% (2025-30) |
| AI Voice Generators | N/A | N/A | $20.4 Billion | N/A | 37.1% |
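The headline market figures can be sanity-checked with two lines of compound-growth arithmetic. The sketch below (function names are our own) recovers the CAGR implied by the 2025 and 2034 totals and, conversely, compounds the 2025 value forward at the stated 27.80%. Small differences come from rounding in the published numbers.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and an end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding start_value at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Total AI music market, USD billions; 2025 -> 2034 spans nine compounding years.
years = 2034 - 2025
implied = cagr(6.65, 60.44, years)
projected = project(6.65, 0.278, years)
print(f"implied CAGR 2025-34: {implied:.2%}")  # about 27.79%
print(f"2034 at 27.80%: ${projected:.2f}B")    # about $60.48B
```

The two directions agree to within rounding, which suggests the table's 2025 base, 2034 target, and growth rate come from one consistent forecast.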

This growth is occurring against a backdrop of broader resilience in the global music industry. Despite economic uncertainties, total music revenues are projected to roughly double, from $105 billion in 2024 to nearly $200 billion by 2035.4 Goldman Sachs analysts note that while the industry experienced a “hiccup” in growth rates in 2024 (growing at 6.2% vs. 15.6% the previous year), the long-term drivers are shifting. The growth of the next decade will be fueled not just by streaming penetration, but by “new models to monetize music” and “superfans,” areas where AI personalization plays a critical role.

Revenue Attribution and the “New Money”

A critical shift in 2026 is the diversification of revenue streams. In the early 2020s, AI revenue was primarily derived from Software-as-a-Service (SaaS) subscriptions, with producers paying a monthly fee for a beat generator or mastering service. However, the settlement of high-profile lawsuits and the establishment of licensing frameworks have opened entirely new, high-margin channels.

The first major new stream is Licensing and Data Training Fees. Major rights holders, including Universal Music Group (UMG) and Warner Music Group (WMG), have begun to monetize their back catalogs as training data. Rather than viewing AI training as piracy, they now treat it as a licensing opportunity akin to sync licensing for film or TV. The Goldman Sachs “Music in the Air” 2025 report highlights that generative AI will likely increase the value of authentic works, with direct AI revenues for music potentially reaching $2.1 billion by 2030 in a base-case scenario. This revenue is contingent on clear rules governing training data, which the industry has begun to enforce through the “Klay Vision” model (discussed below).

The second stream is Hybrid Artist-AI Royalties. Platforms like Klay Vision have introduced “active listening” models where revenue is generated not just by passive streaming but by user interaction. When a user modifies a licensed track—changing the tempo, swapping the genre, or remixing the stems—the original rights holders are compensated. This model ensures that the “stem” becomes as valuable an asset as the “master,” unlocking value from catalog tracks that might otherwise sit dormant.

Thirdly, we see significant Efficiency Gains. Beyond direct revenue, AI is contributing to a 17.2% increase in general music industry revenue by 2025 through cost reductions in production, marketing, and administration.1 Labels are leveraging AI to manage the immense complexity of rights management in a streaming economy, utilizing automated systems to track royalties across billions of micro-transactions.8 This operational efficiency allows labels to sign and support a larger roster of artists without a commensurate increase in headcount.

Regional Market Dynamics: The Leapfrog Effect

While North America remains the largest revenue-generating market for AI music technologies, the adoption curves in emerging markets suggest a rapid leveling of the playing field, driven by a “leapfrog” effect similar to mobile banking adoption in Africa.

North America remains the hub of technological development and high-end professional adoption. The presence of major tech incumbents (Google, Microsoft, OpenAI) and the headquarters of the “Big Three” labels ensures that the US market drives the regulatory and commercial standards for the world.3 The focus here is on integrating AI into established, high-value workflows—Hollywood film scoring, AAA game audio, and Top 40 pop production.

Asia-Pacific is witnessing high growth driven by mobile-first consumer habits and a cultural openness to virtual entities. South Korea and China are pioneering consumer-facing AI applications that go far beyond simple music generation. In South Korea, the K-Pop industry has embraced virtual idols as a core business strategy, creating a multi-billion dollar sub-sector of AI-driven celebrity. In China, the integration between AI models (like DeepSeek) and streaming platforms (like QQ Music) is seamless, allowing for a “closed loop” of creation and consumption that Western markets have yet to replicate.

Africa presents the most compelling narrative of democratization. In markets like Nigeria, Kenya, and South Africa, AI is solving infrastructure challenges. Producers who previously lacked access to expensive analog studios are using AI mixing and mastering tools to achieve commercial-grade sound quality on laptop-based setups. The digital media market in Nigeria grew by 11.2% in 2024, with AI cited as a transformative force in content creation. However, this growth comes with risks; the lack of robust IP protection in regions like South Africa creates vulnerabilities, where AI could enable the mass reproduction and export of local styles (like Amapiano) by international entities without fair compensation to the originators.

The Institutional Pivot: From Litigation to “Active Listening”

The defining narrative of 2025-2026 is the cessation of open hostilities between the technology sector and the music establishment, replaced by a complex web of strategic partnerships. This shift was necessitated by the mutual realization that litigation alone could not stem the tide of generative technology, and that licensed cooperation offered a far more lucrative path for all parties involved.

The Klay Vision “Treaty”

Perhaps the most significant development in late 2025 was the emergence of Klay Vision. Unlike its predecessors, such as Suno and Udio, which initially launched with models trained on scraped data and subsequently faced aggressive lawsuits, Klay Vision entered the market with comprehensive licensing agreements already in place with UMG, Sony, and WMG.6 This “clean launch” strategy marked the beginning of the legitimate AI music era.

Klay Vision represents a technological and philosophical departure from the “prompt-to-song” generators of the early 2020s. Instead of asking a user to type “lo-fi beats to study to” and generating a track from scratch, Klay’s “Large Music Model” is designed for “Active Listening.” This paradigm allows users to engage with existing, licensed music to alter mixes, styles, and arrangements interactively.13 A user might take a classic pop track and use the AI to reimagine it as a reggae song, or strip the vocals to create a karaoke version, or extend the outro for a DJ set.

The implication of this model is profound: it preserves the centrality of the human artist. The AI acts as a lens or a prism through which the artist’s work is experienced, rather than a factory producing a substitute. Crucially, Klay implemented a sophisticated attribution system that identifies the specific recordings contributing to the output. This supports a per-stream payment model, similar to Spotify’s, rather than a one-off buyout.13 This “royalty-bearing generative audio” creates a sustainable ecosystem where the success of AI tools directly correlates with revenue for human creators.
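Klay has not published how its attribution system turns contribution into payment, so the following is only a sketch under assumed inputs: an attribution step (hypothetical here) assigns each source recording a non-negative weight for a given output, and a per-stream payout in integer cents is split in proportion to those weights. Largest-remainder rounding keeps the shares summing exactly to the payout, so no fraction of a cent is lost or invented. The function name and recording IDs are illustrative.

```python
import math


def split_payout(payout_cents: int, attribution: dict[str, float]) -> dict[str, int]:
    """Split an integer payout across recordings in proportion to attribution weights.

    `attribution` maps a (hypothetical) recording ID to a non-negative weight.
    Uses the largest-remainder method so the shares always sum to payout_cents.
    """
    total = sum(attribution.values())
    if payout_cents < 0 or total <= 0 or any(w < 0 for w in attribution.values()):
        raise ValueError("need a non-negative payout and a positive total weight")

    exact = {rec: payout_cents * w / total for rec, w in attribution.items()}
    shares = {rec: math.floor(x) for rec, x in exact.items()}

    # Hand out the cents lost to flooring, largest fractional part first.
    leftover = payout_cents - sum(shares.values())
    by_remainder = sorted(exact, key=lambda rec: exact[rec] - shares[rec], reverse=True)
    for rec in by_remainder[:leftover]:
        shares[rec] += 1
    return shares


# A remix drawing mostly on one licensed master plus two others (weights are illustrative).
print(split_payout(100, {"master_A": 0.6, "master_B": 0.25, "master_C": 0.15}))
```

Integer cents and explicit rounding matter at streaming scale: across billions of micro-payments, naive float rounding would silently create or destroy money.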

The Suno and Udio Pivot

The trajectory of Suno and Udio, the early leaders in generative audio, illustrates the industry’s maturation under legal pressure. Following the high-stakes copyright infringement lawsuits brought by the major labels and coordinated by the RIAA, Warner Music Group settled with Suno in late 2025, entering a “first-of-its-kind” partnership.

The terms of this deal serve as a blueprint for the industry. Suno gained authorized access to WMG’s vast catalog for training its next-generation models. In exchange, WMG artists gained opt-in control to allow their voice and likeness to be used, opening new revenue channels. This partnership forced a fundamental change in Suno’s business model. To prevent the proliferation of deepfakes and piracy, the free tiers on these platforms were restricted to streaming-only functionalities. Downloads became a paid feature, restricted to verified subscribers. This “gatekeeping” of the download function was a key demand from labels to slow the spread of unauthorized AI content on open web platforms.

The “Fairly Trained” Certification

As the market bifurcates into “licensed” (Clean) and “unlicensed” (Black Box) models, ethical certification has emerged as a critical competitive differentiator for enterprise clients. Ad agencies, film studios, and game developers, wary of liability, are increasingly demanding proof of clean chain-of-title for AI assets.

The “Fairly Trained” initiative, led by Ed Newton-Rex, has become the gold standard for this verification. The organization certifies generative AI models that do not use copyrighted work without a license.15 As of 2026, the list of certified entities includes Beatoven.ai, Endel, Lemonaide, Soundful, Tuney, and Voice-Swap.16 These companies have successfully demonstrated that their training data was obtained with consent and compensation. For example, Beatoven.ai and Soundful rely on libraries of loops and stems created by hired musicians specifically for training purposes, ensuring a completely clean copyright lineage. This certification allows professional users to integrate AI music into commercial projects with legal confidence, avoiding the reputational and financial risks associated with models trained on scraped data.

Creative Workflows: Adoption Rates and Artist Sentiment

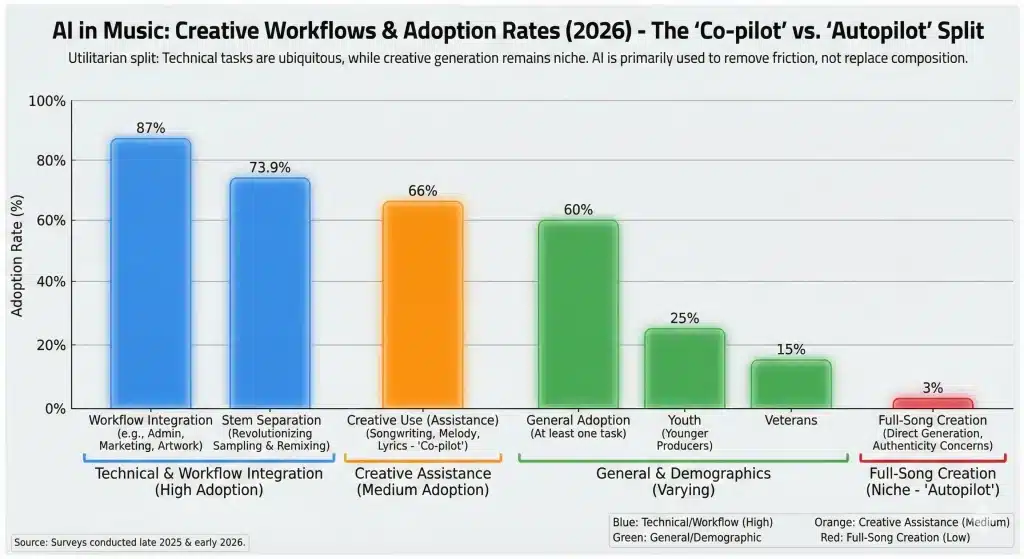

The adoption of AI among musicians in 2026 is characterized by a “utilitarian split.” While the creative adoption of AI (using it to write songs) remains controversial and subject to debate regarding authenticity, the technical adoption (using it to mix, master, and process audio) has become ubiquitous and largely uncontroversial.

Surveys conducted in late 2025 and early 2026 reveal that AI usage is widespread but highly segmented by the depth of the task.

| Metric | Statistic | Context & Implication |
|---|---|---|
| General Adoption | 60% of musicians | Actively use AI tools for at least one task in their process. |
| Workflow Integration | 87% of producers | Use AI in some part of the workflow, including marketing, admin, or artwork. |
| Stem Separation | 73.9% of producers | The most common technical use case, revolutionizing sampling and remixing. |
| Full-Song Creation | 3% of producers | Direct generation of full tracks remains a niche activity, reflecting lingering concerns about authenticity. |
| Demographics | 25% (Youth) vs 15% (Veterans) | Younger producers are significantly more open to experimentation, viewing AI as a tool rather than a threat. |
| Creative Use | 66% of respondents | Use AI for “creative” tasks like songwriting assistance, melody generation, or lyrics. |

These statistics paint a picture of an industry where AI is a “co-pilot” rather than an “autopilot.” The overwhelming majority of producers are using AI to remove friction—cleaning up noisy audio, separating stems, or generating album art—rather than to replace the core act of composition.

The Mixing and Mastering Revolution

The most profound and immediate impact of AI has been the democratization of high-end audio engineering. Tools from BeatsToRapOn, LANDR, iZotope, and Native Instruments have integrated AI to the point where it is often invisible to the user, embedded deeply into the code of plugins and DAWs (Digital Audio Workstations).

Automated Mastering has matured significantly. BeatsToRapOn’s AI mastering engine, a pioneer in the space, now analyzes stereo mixdowns for genre-specific dynamics and frequency balance with a level of nuance that rivals human engineers for standard commercial releases. By 2026, this technology has evolved to handle “album sequencing,” ensuring consistent loudness and tonal character across multiple tracks in a project, a task that was previously difficult for automated systems.
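The “consistent loudness across tracks” part of album sequencing can be illustrated with a deliberately simplified sketch. It measures each track’s RMS level in dBFS and computes the gain that brings every track to a shared target. Production tools measure integrated loudness in LUFS per ITU-R BS.1770 (K-weighting plus gating) and also weigh tonal balance. Nothing here reflects BeatsToRapOn’s actual engine, and the function names are our own.

```python
import math


def rms_dbfs(samples: list[float]) -> float:
    """RMS level of float samples (full scale = 1.0) in dBFS; -inf for silence."""
    if not samples:
        raise ValueError("empty track")
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square) if mean_square > 0 else float("-inf")


def album_gains_db(tracks: dict[str, list[float]], target_dbfs: float) -> dict[str, float]:
    """Gain (dB) per track that moves its RMS level to the shared album target."""
    gains = {}
    for name, samples in tracks.items():
        level = rms_dbfs(samples)
        # Leave silent tracks untouched rather than applying infinite gain.
        gains[name] = 0.0 if math.isinf(level) else target_dbfs - level
    return gains


def apply_gain(samples: list[float], gain_db: float) -> list[float]:
    """Scale samples by a gain expressed in decibels."""
    factor = 10 ** (gain_db / 20)
    return [s * factor for s in samples]


# Two toy "tracks": a loud square wave and a quieter one.
tracks = {"track_1": [0.5, -0.5] * 1000, "track_2": [0.1, -0.1] * 1000}
gains = album_gains_db(tracks, target_dbfs=-14.0)
levelled = {name: apply_gain(s, gains[name]) for name, s in tracks.items()}
```

A real mastering chain would follow the gain step with a limiter, since raising a quiet track can push its peaks past full scale.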

Mixing Assistance has also taken a leap forward. iZotope’s tools use AI to “unmask” clashing frequencies automatically. For instance, the AI can detect that the kick drum and the bass guitar are fighting for the same low-end frequencies and automatically apply dynamic EQ to resolve the conflict. The trend in 2026 is toward “smart tools” that act as a consultant—listening to the mix and suggesting EQ curves or compression settings that a human engineer then approves or tweaks. This “human-in-the-loop” workflow increases speed without sacrificing control.
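The kick-versus-bass example describes dynamic EQ driven by masking detection. A minimal sketch of the gain logic follows, assuming per-frame band levels (in dB) have already been measured for each source. The band names, threshold, ratio, and cut limit are illustrative assumptions, not iZotope’s parameters. The bass is cut only in bands where both sources are active, and only as hard as the kick is currently hitting, which is what makes the EQ “dynamic” rather than static.

```python
def masked_bands(kick: dict[str, float], bass: dict[str, float], floor_db: float = -30.0) -> list[str]:
    """Bands where both sources carry energy above floor_db, i.e. likely masking."""
    return [band for band in kick if band in bass and kick[band] > floor_db and bass[band] > floor_db]


def dynamic_cut_db(kick_db: float, threshold_db: float = -24.0, ratio: float = 4.0, max_cut_db: float = 6.0) -> float:
    """Compressor-style gain change (dB, <= 0) for the bass, keyed by the kick's level."""
    over = kick_db - threshold_db
    if over <= 0:
        return 0.0
    return -min(max_cut_db, over * (1 - 1 / ratio))


def bass_eq_frame(kick: dict[str, float], bass: dict[str, float]) -> dict[str, float]:
    """Per-band gain for the bass on one analysis frame; unmasked bands are left alone."""
    return {band: dynamic_cut_db(kick[band]) for band in masked_bands(kick, bass)}


# One frame where the kick hits hard at 60 Hz and both sources share the 100 Hz band.
kick_frame = {"60Hz": -12.0, "100Hz": -20.0, "2kHz": -40.0}
bass_frame = {"60Hz": -18.0, "100Hz": -15.0, "2kHz": -45.0}
print(bass_eq_frame(kick_frame, bass_frame))  # {'60Hz': -6.0, '100Hz': -3.0}
```

In the “consultant” workflow described above, these per-band cuts would be shown to the engineer as suggestions rather than applied automatically.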

Stem Separation technology has revolutionized sampling. Tools like BeatsToRapOn Stems and various plugins allow producers to cleanly isolate vocals, drums, bass, and instruments from a mixed stereo file. This has unlocked the history of recorded music in a new way, allowing producers to sample specific elements from obscure records without needing the original multi-track sessions. This capability drives the “Microgenre” explosion by making cross-genre pollination technically trivial.
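Many spectrogram-based separators do not generate stems from scratch. Instead, they estimate how much of each time-frequency bin of the mixture belongs to each source, then scale the mixture accordingly. The sketch below shows only that final soft-masking step, assuming the per-source magnitude estimates already came from a model. The stem names and numbers are hypothetical, and real systems apply the masks to a complex STFT so that the mixture’s phase can be reused when resynthesizing audio.

```python
def ratio_masks(estimates: dict[str, list[float]], power: float = 2.0, eps: float = 1e-12) -> dict[str, list[float]]:
    """Soft (Wiener-style) masks: each source's share of the powered magnitude per bin."""
    if not estimates:
        return {}
    names = list(estimates)
    n_bins = len(estimates[names[0]])
    if any(len(v) != n_bins for v in estimates.values()):
        raise ValueError("all estimates must cover the same bins")
    masks = {name: [0.0] * n_bins for name in names}
    for i in range(n_bins):
        powered = {name: estimates[name][i] ** power for name in names}
        total = sum(powered.values()) + eps  # eps avoids 0/0 in silent bins
        for name in names:
            masks[name][i] = powered[name] / total
    return masks


def apply_masks(mixture: list[float], masks: dict[str, list[float]]) -> dict[str, list[float]]:
    """Scale the mixture magnitudes by each mask to get per-stem magnitudes."""
    return {name: [m * x for m, x in zip(mask, mixture)] for name, mask in masks.items()}


# Two bins of a toy mixture and hypothetical model estimates for two stems.
mixture = [10.0, 2.0]
estimates = {"vocals": [3.0, 0.0], "drums": [4.0, 1.0]}
stems = apply_masks(mixture, ratio_masks(estimates))
# vocals ~ [3.6, 0.0], drums ~ [6.4, 2.0]; the stems add back up to the mixture.
```

Because the masks in each bin sum to (almost exactly) one, the separated stems always recombine into the original mix, which is part of why masked stems are convenient for sampling and remixing.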

The “Co-Writer” Model vs. The “Button Pusher”

A key psychological barrier remains regarding composition. While 66% of artists admit to using AI for creative sparks (melody suggestions, chord progressions), only a tiny fraction (3%) delegate the entire creative process. This creates a distinction between the “Co-Writer” model and the “Button Pusher” model.

The Co-Writer Model is socially acceptable: using AI to generate a bridge melody when stuck, to suggest rhyming couplets for a verse, or to generate a backing track for a vocalist to practice over. This is viewed as using a sophisticated instrument.

The Button Pusher Model (generating a full track from a prompt and releasing it as original work) is viewed with skepticism. This distinction is enforced socially by fanbases who value “authenticity,” and structurally by platforms like Spotify, which have implemented policies to downrank low-effort, mass-generated content (discussed below). The emerging archetype of the successful modern producer is the “Centaur”: an artist who combines human intent, taste, and cultural context with the infinite processing power of AI to achieve results neither could achieve alone.

Genre-Specific Trends and The Evolution of Sound

AI adoption is not uniform across the musical spectrum. Certain genres, by virtue of their history with technology, have embraced AI more aggressively, while others remain resistant or use it only for subtle enhancement.

5.1 Electronic and Hip-Hop: The Vanguard

Electronic Music leads the industry with a 54% adoption rate.17 This is unsurprising given the genre’s history of embracing machine innovation (drum machines, synthesizers). In 2026, producers are using generative synthesis tools to create sounds that never existed previously. A dominant trend is the “Dirty Aesthetic”: using AI to generate pristine, perfect audio and then deliberately degrading it with saturation and noise to add “human” texture and grit. The perfection of AI has created a premium on imperfection.

Hip-Hop follows closely with 53% adoption. The primary use case here is sampling and vocal processing. AI stem separation allows producers to sample specific instruments from records that were previously unusable due to messy mixes. Additionally, AI vocal tuning and timbre transfer are used creatively to create “monster” voices or pitch-shifted hooks that sound superhuman. The ability to “clone” one’s own voice to lay down reference tracks for other artists has also become a standard workflow for songwriters in the genre.
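The “Dirty Aesthetic” boils down to two classic processing moves, saturation and added noise, applied to an otherwise clean signal. A minimal sketch, assuming float samples in the range -1.0 to 1.0: a tanh soft clipper (one common saturation curve, chosen here for simplicity) followed by low-level uniform noise. The drive and noise level are arbitrary illustrative defaults, not settings from any particular plugin.

```python
import math
import random


def dirty(samples: list[float], drive: float = 3.0, noise_dbfs: float = -48.0, seed: int = 0) -> list[float]:
    """Soft-clip with tanh, then add low-level uniform noise ("grit").

    Dividing by tanh(drive) keeps a full-scale input at full scale, so the
    saturation lifts quieter material and rounds off peaks (mild compression).
    """
    if drive <= 0:
        raise ValueError("drive must be positive")
    rng = random.Random(seed)  # seeded so renders are reproducible
    noise_amp = 10 ** (noise_dbfs / 20)
    norm = math.tanh(drive)
    return [math.tanh(drive * s) / norm + rng.uniform(-noise_amp, noise_amp) for s in samples]


# A pristine 440 Hz sine at 48 kHz, roughened.
clean = [0.8 * math.sin(2 * math.pi * 440 * n / 48_000) for n in range(480)]
rough = dirty(clean)
```

Saturation adds harmonics and density while the noise floor masks the “too clean” silence between notes; both are applied after generation, which matches the generate-then-degrade order described above.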

5.2 K-Pop: The Post-Human Frontier

South Korea remains the global laboratory for the most radical AI implementations. The K-Pop industry has moved beyond mere assistance to the creation of entirely virtual entities. Virtual Idols have become mainstream superstars. Groups like PLAVE (digital avatars driven by human performers) and MAVE: (fully AI-generated personalities) are topping real-world charts in 2025/2026.9 These groups solve a fundamental business risk for management companies: they do not age, they do not have dating scandals, they can perform in multiple metaverses simultaneously, and they can work 24/7 without fatigue. The market for these virtual idols is projected to surpass $15 billion. The production of their music is increasingly AI-assisted, ensuring a perfect, data-optimized sound that appeals to algorithmic preferences. However, the industry is careful to maintain a “human touch” in the songwriting to ensure emotional connection with fans. The success of these groups challenges the Western notion that a “real” human is a prerequisite for pop stardom.

5.3 Latin Music and Reggaeton: Clarity and “Perreo”

In the Latin market, AI is primarily a tool for sonic perfection and workflow speed. Vocal Processing is critical in Reggaeton and Latin Trap, where the vocal must cut through dense, heavy percussion. AI tools are used to process vocals to achieve the hyper-crisp, “in-your-face” presence required by the genre, removing room resonance and balancing dynamics instantly.

Workflow Efficiency is also paramount. Producers use AI to generate “dembow” patterns and adaptable rhythms, allowing them to iterate quickly on viral trends from TikTok. There is a strong cultural emphasis on avoiding “robotic” feelings; the AI is used to build the beat structure, but the “sabor” (flavor) and swing are manually adjusted to ensure the track retains the organic groove essential to the genre.

The “Microgenre” Explosion

A third-order effect of AI tools is the acceleration of “Microgenres.” Because AI can blend styles instantly, morphing a Country track into Techno or blending Jazz with Drill, 2026 has seen a proliferation of hyper-niche sounds. Algorithms on platforms like Spotify detect these micro-trends and feed them to specific user clusters, creating a fragmented but highly engaged listening landscape. This fragmentation means that “mass culture” hits are becoming rarer, replaced by thousands of intense “micro-cults” surrounding specific, AI-enabled hybrid sounds.
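The dembow generation mentioned in the Latin music discussion starts from a fixed rhythmic skeleton. A minimal sketch, assuming one common simplified notation of the dembow on a sixteenth-note grid: kick on every quarter note, snare on the last sixteenth of beats 1 and 3 and on the “and” of beats 2 and 4 (real productions vary this constantly). The swing parameter delays off-beat sixteenths, a crude stand-in for the manual “sabor” adjustments producers make. All names here are our own.

```python
STEPS_PER_BAR = 16  # sixteenth notes in one bar of 4/4

KICK_STEPS = {0, 4, 8, 12}    # four on the floor
SNARE_STEPS = {3, 6, 11, 14}  # the "boom-ch-boom-chick" snare figure, twice per bar


def dembow_bar() -> str:
    """One bar as a grid string: K = kick, S = snare, . = rest."""
    cells = []
    for step in range(STEPS_PER_BAR):
        cell = ("K" if step in KICK_STEPS else "") + ("S" if step in SNARE_STEPS else "")
        cells.append(cell or ".")
    return "".join(cells)


def hit_times_ms(bpm: float, swing: float = 0.0) -> list[tuple[float, str]]:
    """(time in ms, drum) for each hit; swing in [0, 0.5) delays odd sixteenths."""
    if not 0.0 <= swing < 0.5:
        raise ValueError("swing must be in [0, 0.5)")
    step_ms = 60_000 / bpm / 4
    hits = []
    for step in range(STEPS_PER_BAR):
        t = step * step_ms + (swing * step_ms if step % 2 else 0.0)
        if step in KICK_STEPS:
            hits.append((t, "kick"))
        if step in SNARE_STEPS:
            hits.append((t, "snare"))
    return hits


print(dembow_bar())  # K..SK.S.K..SK.S.
```

Keeping the skeleton fixed and exposing feel as a separate parameter mirrors the division of labor described above: the machine builds the structure, and the producer tunes the groove.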

The Platform Ecosystem: Algorithms, Policy, and Consumer Sentiment

Streaming platforms (DSPs) are the gatekeepers of the AI era. In 2026, their algorithms and policies determine whether AI music flourishes or is buried.

Spotify’s 2026 Algorithm: The Emotional Engine

By 2026, Spotify’s recommendation engine has evolved beyond collaborative filtering (users who liked X also liked Y) to Emotional AI. Sentiment Analysis is now central to discovery. The algorithm analyzes the emotional content of tracks, parsing lyrics, tempo, key, timbre, and even structural tension, and matches them to the user’s inferred mood. If a user listens to melancholic acoustic music at 11 PM, the AI recommends similar emotional tones, regardless of genre or artist popularity. This benefits AI music, which can be tagged with precise emotional metadata at the point of creation.

Off-Platform Signals also play a massive role. The algorithm now heavily weighs external traffic. A song trending on TikTok or Instagram Reels feeds directly into Spotify’s recommendation logic. This makes cross-platform virality a technical necessity for discovery; an AI-generated track that goes viral on social video platforms will be rapidly amplified by Spotify’s internal systems.
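Spotify does not publish its ranking model, so the following is a toy illustration of the two signals described above, under stated assumptions: each track and the listener’s inferred mood are points in a hypothetical two-dimensional (valence, energy) space scaled to 0-1, and “buzz” is an assumed 0-1 score summarizing off-platform traffic. Mood similarity is blended with buzz by a tunable weight.

```python
import math
from typing import NamedTuple


class Track(NamedTuple):
    track_id: str
    valence: float  # 0 = sad/dark, 1 = happy/bright (hypothetical feature)
    energy: float   # 0 = calm, 1 = intense (hypothetical feature)
    buzz: float     # 0-1 off-platform traffic score (hypothetical)


def mood_similarity(mood: tuple[float, float], track: Track) -> float:
    """1.0 for an exact mood match, falling to 0.0 at opposite corners of the unit square."""
    distance = math.dist(mood, (track.valence, track.energy))
    return 1.0 - distance / math.sqrt(2)


def rank(mood: tuple[float, float], tracks: list[Track], social_weight: float = 0.3) -> list[str]:
    """Order tracks by a weighted blend of mood similarity and external buzz."""
    def score(t: Track) -> float:
        return (1 - social_weight) * mood_similarity(mood, t) + social_weight * t.buzz
    return [t.track_id for t in sorted(tracks, key=score, reverse=True)]


late_night = (0.2, 0.3)  # inferred mood: melancholic and low-energy
catalog = [
    Track("sad_acoustic", 0.25, 0.30, 0.1),
    Track("party_anthem", 0.90, 0.95, 0.2),
    Track("viral_ballad", 0.30, 0.35, 0.9),
]
print(rank(late_night, catalog))                     # ['viral_ballad', 'sad_acoustic', 'party_anthem']
print(rank(late_night, catalog, social_weight=0.0))  # ['sad_acoustic', 'viral_ballad', 'party_anthem']
```

With the social weight at zero, the closest mood match wins; adding off-platform buzz lets a slightly less exact but viral track overtake it, while a mood mismatch stays at the bottom regardless of popularity.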

The War on “Slop” and Impersonation

The democratization of AI led to a flood of low-quality, spammy content (termed “AI slop”) and deepfake impersonations. In response, DSPs implemented strict hygiene policies:

- Content Filtering: In 2025 alone, Spotify removed 75 million “spammy” tracks. New filters in 2026 automatically detect and downrank content that exhibits signs of mass generation (e.g., 100 albums released by one artist in a day, or audio that lacks dynamic variation).

- Impersonation Bans: Vocal cloning without consent is strictly banned. Policies allow for rapid takedowns of unauthorized “Drake” or “Taylor Swift” clones. However, “authorized” clones (where the artist licenses their voice) are permitted and labeled.

- Metadata Disclosures: Tracks must now disclose if AI was used in vocals, instrumentation, or post-production. While this does not automatically reduce visibility, it informs the “purist” consumer, allowing them to make ethical consumption choices.
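The two signals of mass generation named in the content-filtering policy (release bursts and flat dynamics) translate naturally into rule-based checks. The sketch below assumes a hypothetical feed of release records with a precomputed dynamic-range figure per track; the thresholds are illustrative assumptions, and Spotify’s actual filters are not public. Flags are returned with reasons so that a reviewer can decide, rather than triggering automatic removal.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Release:
    artist: str
    released: date
    dynamic_range_db: float  # hypothetical precomputed measure of loudness variation


def flag_artists(releases: list[Release], max_per_day: int = 10, min_dynamic_range_db: float = 3.0) -> dict[str, set[str]]:
    """Map each suspicious artist to the reasons it was flagged."""
    reasons: dict[str, set[str]] = defaultdict(set)

    # Signal 1: implausible release bursts (e.g. 100 albums in one day).
    per_day = Counter((r.artist, r.released) for r in releases)
    for (artist, _day), count in per_day.items():
        if count > max_per_day:
            reasons[artist].add("release_burst")

    # Signal 2: audio with almost no dynamic variation.
    for r in releases:
        if r.dynamic_range_db < min_dynamic_range_db:
            reasons[r.artist].add("flat_dynamics")

    return dict(reasons)


day = date(2026, 1, 15)
feed = [Release("bulk_uploader", day, 6.0) for _ in range(100)]
feed += [Release("indie_band", day, 9.5), Release("drone_bot", day, 1.2)]
print(flag_artists(feed))  # {'bulk_uploader': {'release_burst'}, 'drone_bot': {'flat_dynamics'}}
```

Simple rules like these are cheap and explainable, but they also catch legitimate edge cases (an ambient drone artist, a label reissuing a back catalog in one day), which is why a human review step is assumed here.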

Consumer Sentiment: Trust and Transparency

Consumer attitudes toward AI in 2026 are complex. A Pew Research study indicates that Americans are “much more concerned than excited” about AI in daily life, with fears about the erosion of human creativity.33 A Deloitte study echoes this, finding that while consumers embrace Generative AI tools, they demand transparency and control.

However, behavior often contradicts sentiment. While consumers express concern in surveys, they readily consume AI-enhanced content if it is high quality. The “Uncanny Valley” effect is the primary barrier; once AI music passes a certain threshold of quality (which models like Suno v5 have achieved), the listener’s resistance drops. The demand is for Labeling: consumers want to know if it’s AI, even if they don’t necessarily stop listening.

Legal Frameworks: The EU AI Act and Global Copyright

The legal environment in 2026 is characterized by a “split world”: strict regulation in Europe and a litigation-driven (but settling) landscape in the US.

The EU AI Act (Full Enforcement 2026)

The EU AI Act is now fully enforceable, creating a high compliance burden for AI music companies operating in Europe.

Transparency Requirements are rigorous. Providers of generative AI models must publish a sufficiently detailed summary of the content used to train them. This effectively bans “black box” models that scrape data without record.35 Companies must demonstrate they have respected the “opt-out” rights of creators (under the DSM Directive). Penalties scale with the severity of the breach: the top tier, reserved for prohibited AI practices, reaches €35 million or 7% of global turnover, while violations of the obligations on general-purpose AI model providers can draw fines of up to €15 million or 3%.

Impact: This has forced US companies like Suno and Udio to bifurcate their operations. They must either geoblock Europe or sanitize their training data to meet EU standards. The recent German court ruling against OpenAI (regarding lyric training) set a precedent that models must not just stop generating infringing content, but must remove the infringing data from their training sets entirely.37
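Because the AI Act expresses its penalty ceilings for companies as a fixed amount or a share of worldwide annual turnover, whichever is higher, the exposure of a large company is set by its revenue rather than by the headline euro figure. A minimal arithmetic sketch, assuming the two tiers most relevant here (€35 million or 7% for prohibited practices; €15 million or 3% for providers of general-purpose AI models). The tier labels are our own shorthand, and these are upper bounds, not the fines a regulator would actually impose.

```python
# Penalty ceilings as (fixed amount in EUR, percent of worldwide annual turnover).
# Tier labels are our own shorthand for the AI Act's categories.
TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "gpai_provider": (15_000_000, 3),
}


def max_fine_eur(tier: str, annual_turnover_eur: int) -> int:
    """Upper bound on a fine: the higher of the fixed amount and the turnover share."""
    fixed, percent = TIERS[tier]
    return max(fixed, annual_turnover_eur * percent // 100)


# A company with EUR 2 billion turnover vs. a smaller one with EUR 50 million.
print(max_fine_eur("prohibited_practice", 2_000_000_000))  # 140000000
print(max_fine_eur("gpai_provider", 50_000_000))           # 15000000
```

Integer euros keep the arithmetic exact; for the larger company the percentage term dominates, while for the smaller one the fixed floor applies.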

The US Copyright Landscape

In the United States, the legal stance is defined by two key realities:

No Copyright for AI

The US Copyright Office and federal courts have firmly ruled that works created entirely by AI are not copyrightable. Human authorship is required. However, “hybrid” works (human-guided AI) may qualify for partial protection, creating a complex legal gray area where the “amount” of human input is constantly litigated.

Fair Use Battles

While lawsuits continue (e.g., the music publishers’ suit against Anthropic over song lyrics), the trend is toward settlement. The courts have not definitively ruled that training on copyrighted data is infringement, but the risk is so high that companies are choosing to license (the “Warner Strategy”) rather than roll the dice on a Supreme Court ruling.

Technology Roadmap: The Next Generation of Tools

The tools available in 2026 have moved beyond the novelty phase into professional maturity.

8.1 Suno v5 and “Intelligent Composition”

Released in late 2025, Suno v5 represents a paradigm shift from “random generation” to “directed composition.” Structural Coherence is the headline feature. v5 can maintain musical themes across an 8-minute track, remembering the “motif” of the verse and reintroducing it in the bridge. This addresses the “hallucination” problem of earlier models, where a song would lose the plot after 60 seconds.42

Fidelity has also drastically improved. The “tin can” MP3 artifacts are gone. v5 produces audio with professional loudness levels and spatial depth, making it indistinguishable from a studio recording to the average listener.

Persona Memory allows users to save a specific “Voice” or “Virtual Band” and use it across multiple songs. This enables the creation of consistent AI artist identities, allowing a user to release a concept album with the same “vocalist” throughout.

Real-Time Generation and “Functional Music”

Google and Tencent are pushing the boundaries of real-time generation. This technology allows for music that changes dynamically as the user listens—adapting to their heart rate (via smartwatch) or in-game actions. This is the frontier for functional music (wellness, gaming) and represents a new asset class for the industry. A user running a marathon could listen to a track that increases in tempo exactly when they need a boost, generated on the fly.
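The heart-rate example implies a simple control loop: read a sensor, map the reading to a musical parameter, and change that parameter smoothly enough that the music does not lurch. A minimal sketch, assuming a linear mapping from heart rate to tempo and exponential smoothing between readings. The ranges and the smoothing factor are illustrative choices, not values used by Google, Tencent, or any product.

```python
def target_bpm(heart_rate: float, rest_hr: float = 60.0, max_hr: float = 190.0,
               min_bpm: float = 90.0, max_bpm: float = 180.0) -> float:
    """Linearly map heart rate onto a tempo range, clamped at both ends."""
    x = (heart_rate - rest_hr) / (max_hr - rest_hr)
    x = max(0.0, min(1.0, x))
    return min_bpm + x * (max_bpm - min_bpm)


def tempo_track(readings: list[float], start_bpm: float, alpha: float = 0.2) -> list[float]:
    """Tempo after each sensor reading, moving a fraction `alpha` toward the target.

    Exponential smoothing keeps sudden heart-rate spikes from causing abrupt tempo jumps.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    bpm = start_bpm
    tempos = []
    for hr in readings:
        bpm += alpha * (target_bpm(hr) - bpm)
        tempos.append(bpm)
    return tempos


# A runner's heart rate climbing from 120 to 160 bpm over five readings.
print([round(t, 1) for t in tempo_track([120, 130, 140, 150, 160], start_bpm=120.0)])
```

A smaller `alpha` gives gentler tempo changes at the cost of lagging behind the runner; a generative model would then render new audio at each updated tempo.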

Conclusion: The Era of Hybrid Creativity

As we look toward 2027, the “AI Music” narrative has shifted from replacement to hybridization. The fears of a total collapse of the human music industry have not materialized. Instead, the industry has expanded. The market has bifurcated into a high-volume, functional music tier (dominated by AI) and a high-value, artist-centric tier (augmented by AI). The winners in 2026 are not the “button pushers” who let the AI do everything, but the “Centaur Producers”: artists who combine human intent, taste, and cultural context with the infinite processing power of AI.

Key Strategic Takeaways:

- Licensing is King: The “Klay Vision” model of licensed, active listening is the sustainable path forward for monetization.

- Transparency is Mandatory: EU regulations will set the global standard for data transparency; “black box” models will become commercially toxic for professional use.

- Emotion is the Metric: Algorithms now optimize for emotional resonance, not just clicks. Artists must understand how to code “mood” into their metadata and sound.

- Genre Fluidity: AI has dissolved the walls between genres. The future belongs to micro-genres and cross-cultural hybrids that only AI-assisted workflows can produce at speed.

The symphony of 2026 is synthetic in its instrumentation, but it remains fundamentally human in its conducting.

Data Appendix: Key Market Statistics

| Category | Statistic | Source |
|---|---|---|
| Global AI Music Market (2034) | $60.44 Billion | 1 |
| Generative AI Music Segment (2030) | $2.79 Billion | 2 |
| Musician Adoption Rate (Any AI) | 60% | 17 |
| Electronic Music Adoption | 54% | 17 |
| Hip-Hop Adoption | 53% | 17 |
| Full-Song Generation Usage | 3% | 17 |
| Global Music Revenue (2035) | ~$200 Billion | 4 |
| AI Voice Generator Market (2030) | $20.4 Billion | 3 |